On Tuesday, October 28, 2025 (Eastern Time), NVIDIA CEO Jensen Huang delivered a keynote at GTC Washington, D.C., the company's second GTC conference of the year, focusing on breakthroughs in 6G, AI, quantum computing, and robotics. Huang emphasized that with Moore's Law slowing, accelerated computing and GPU technology have become the core driving force of technological progress.

On the integration of AI and 6G, NVIDIA announced a strategic partnership with Nokia, investing $1 billion in Nokia shares to jointly advance AI-native 6G network platforms. In quantum computing, NVIDIA launched NVQLink, an interconnect that links quantum processors to GPU supercomputers and bridges AI supercomputing with quantum computing; it has already garnered support from 17 quantum computing companies. NVIDIA also announced a partnership with the U.S. Department of Energy to build the department's largest AI supercomputer. For AI factories, NVIDIA will launch the BlueField-4 processor, designed to run AI factory operations.

Furthermore, NVIDIA is fueling the Robotaxi boom by announcing partnerships with ride-sharing pioneer Uber and Chrysler's parent company, Stellantis. Uber plans to deploy 100,000 Robotaxi vehicles powered by NVIDIA technology starting in 2027.

NVIDIA has also partnered with AI software company Palantir and pharmaceutical giant Eli Lilly, integrating its GPU computing power with enterprise data platforms and pharmaceutical R&D, respectively, with the aim of moving AI from concept to practical application. The two collaborations, targeting enterprise operational intelligence and drug discovery, signal that AI is being commercialized faster in complex industry settings.

Jensen Huang stated, "AI is the most powerful technology of our time, and science is its greatest frontier." The partnerships announced on Tuesday mark NVIDIA's strategic transformation from a chip manufacturer to a full-stack AI infrastructure provider.

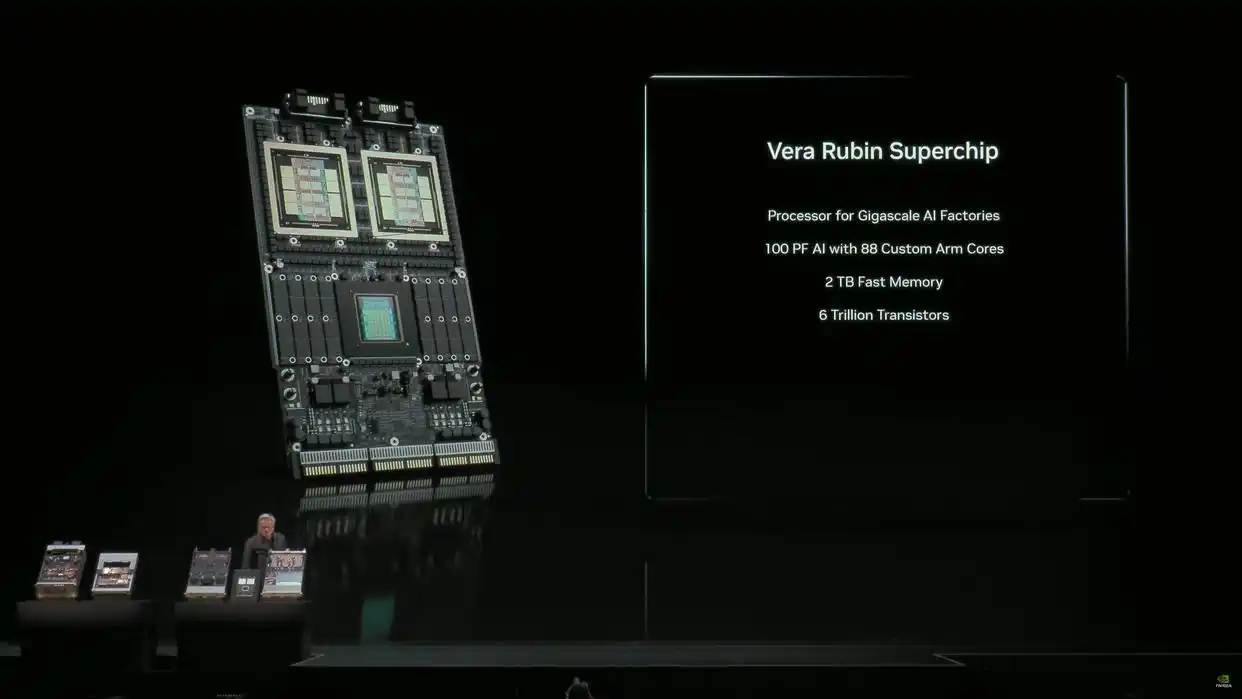

Huang also showed NVIDIA's next-generation Vera Rubin superchip publicly for the first time. He said the first Rubin GPU samples have come back from TSMC and are already in NVIDIA's labs, with volume production expected around this time next year, or possibly earlier. Vera Rubin is NVIDIA's third-generation NVL72 rack-scale supercomputer, with a cable-free interconnect design. Its single-rack computing power reaches 100 petaflops, 100 times that of the original DGX-1, meaning that work that previously required 25 racks can now be done with a single Vera Rubin.

In his speech, Huang explicitly rejected the AI-bubble thesis: "I don't think we're in an AI bubble. We're using all these different AI models; we're using a lot of services and are happy to pay for them." His core argument is that AI models are now capable enough that customers will pay for them, which in turn justifies the costly build-out of computing infrastructure.

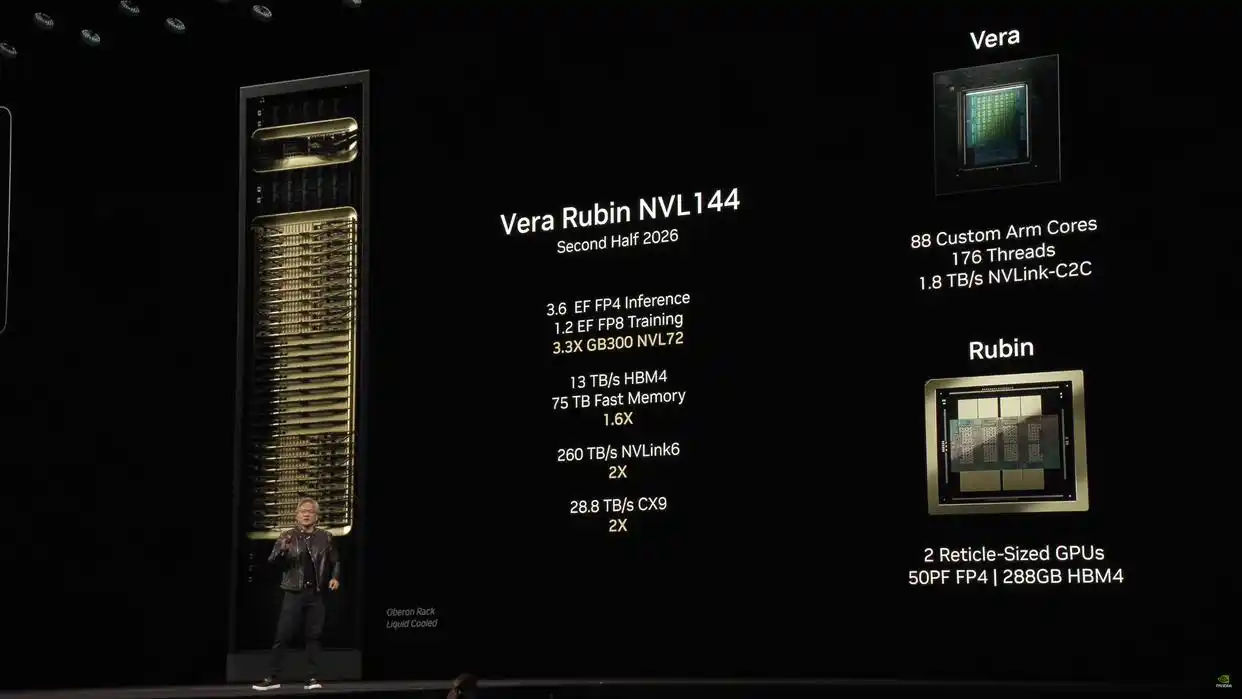

Rubin Architecture Goes Cable-Free and Fully Liquid-Cooled; NVL144 Platform Delivers 3.3x the Performance of GB300

A Vera Rubin compute tray delivers up to 440 petaflops of inference performance. According to NVIDIA, the tray carries eight Rubin CPX GPUs, a BlueField-4 data processor, two Vera CPUs, and four Rubin GPU packages, all connected without cables and fully liquid-cooled.

Each Rubin GPU combines two reticle-sized dies, delivers up to 50 petaflops of FP4 performance, and is equipped with 288 GB of next-generation HBM4 memory. The Vera CPU uses a custom Arm design with 88 cores and 176 threads, and its NVLink-C2C interconnect runs at up to 1.8 TB/s.

The system's NVLink switch lets all GPUs transmit data simultaneously, while the Spectrum-X Ethernet switch lets processors communicate at the same time without congestion. Together with Quantum switches, the system supports both Quantum InfiniBand and Spectrum-X Ethernet networking.

The NVIDIA Vera Rubin NVL144 platform achieves 3.6 Exaflops in FP4 inference performance and 1.2 Exaflops in FP8 training performance, a 3.3x improvement over the GB300 NVL72.

HBM4 memory bandwidth reaches 13 TB/s, and fast-memory capacity reaches 75 TB, a 60% improvement over the GB300. NVLink and ConnectX-9 (CX9) bandwidth are both doubled, reaching up to 260 TB/s and 28.8 TB/s, respectively.

Each Rubin GPU uses eight HBM4 memory sites and two reticle-sized GPU dies. The board carries a total of 32 LPDDR system-memory sites, which work alongside the HBM4 memory on the Rubin GPUs, with extensive power-delivery circuitry surrounding each chip.
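As a rough consistency check, the NVL144 headline figure can be rebuilt from the per-GPU numbers above. The sketch assumes, on our part, that "144" counts GPU dies, so with two dies per Rubin GPU a rack holds 72 Rubin packages; the constant and function names are illustrative only.

```python
# Back-of-envelope check of the Vera Rubin NVL144 figures quoted above.
# Assumption (ours, not NVIDIA's): "144" counts GPU dies, and each Rubin
# GPU package holds two dies, so one rack has 72 Rubin GPU packages.

DIES_PER_RUBIN_GPU = 2        # "two reticle-sized dies" per Rubin GPU
FP4_PF_PER_RUBIN_GPU = 50     # up to 50 petaflops FP4 per Rubin GPU
HBM4_GB_PER_RUBIN_GPU = 288   # 288 GB of HBM4 per Rubin GPU


def nvl144_estimates(gpu_dies: int = 144) -> dict:
    """Scale per-GPU specs up to a full rack (decimal units: 1 EF = 1000 PF)."""
    packages = gpu_dies // DIES_PER_RUBIN_GPU
    return {
        "packages": packages,
        "fp4_exaflops": packages * FP4_PF_PER_RUBIN_GPU / 1000,
        "hbm4_tb": packages * HBM4_GB_PER_RUBIN_GPU / 1000,
    }


est = nvl144_estimates()
print(est)  # {'packages': 72, 'fp4_exaflops': 3.6, 'hbm4_tb': 20.736}
```

Under that assumption, 72 × 50 petaflops reproduces the quoted 3.6 exaflops of FP4. HBM4 alone totals only about 20.7 TB, so the quoted 75 TB of fast memory presumably also counts the LPDDR system memory on the board; that split is our inference, not an NVIDIA figure.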

The Rubin Ultra platform, the second generation of Rubin, will be released in the second half of 2027, expanding the NVL system size from 144 to 576. The Rubin Ultra GPU uses four reticle-sized dies, delivers up to 100 petaflops of FP4 performance, and carries 1 TB of HBM4e memory distributed across 16 HBM sites.

The Rubin Ultra NVL576 platform will deliver 15 exaflops of FP4 inference performance and 5 exaflops of FP8 training performance, a 14x improvement over the GB300 NVL72. HBM4e memory bandwidth reaches 4.6 PB/s, and fast-memory capacity reaches 365 TB, an 8x improvement over the GB300. NVLink and CX9 bandwidth improve by 12x and 8x, respectively, reaching up to 1.5 PB/s and 115.2 TB/s.

The platform's CPU architecture remains consistent with Vera Rubin, continuing to use an 88-core Vera CPU configuration.
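Every "Nx over GB300 NVL72" claim implicitly fixes a GB300 NVL72 baseline, so dividing each quoted value by its ratio shows whether the NVL144 and NVL576 claims agree with each other. This sketch uses only numbers from the text (the 60% gain is taken as 1.6x); reading "576" as dies at four dies per Rubin Ultra package is our assumption.

```python
# metric -> ((NVL144 value, NVL144 ratio), (NVL576 value, NVL576 ratio)),
# values as quoted in the text; units are given in each metric label.
CLAIMS = {
    "FP4 inference (EF)": ((3.6, 3.3), (15.0, 14.0)),
    "Fast memory (TB)": ((75.0, 1.6), (365.0, 8.0)),
    "NVLink (TB/s)": ((260.0, 2.0), (1500.0, 12.0)),
    "CX9 (TB/s)": ((28.8, 2.0), (115.2, 8.0)),
}


def implied_baseline(value: float, ratio: float) -> float:
    """GB300 NVL72 figure implied by 'value is ratio x GB300 NVL72'."""
    return value / ratio


for metric, ((v144, r144), (v576, r576)) in CLAIMS.items():
    b144 = implied_baseline(v144, r144)
    b576 = implied_baseline(v576, r576)
    print(f"{metric:20s} NVL144 implies {b144:7.2f}, NVL576 implies {b576:7.2f}")

# Assumption (ours): 576 dies / 4 dies per package = 144 Rubin Ultra GPUs.
ultra_packages = 576 // 4
ultra_fp4_ef = ultra_packages * 100 / 1000   # 100 PF FP4 each; 1 EF = 1000 PF
print(ultra_fp4_ef)  # 14.4
```

The implied baselines land close together (about 1.1 EF of FP4, roughly 46 TB of fast memory, 125 to 130 TB/s of NVLink, and 14.4 TB/s of CX9), so the two sets of claims look mutually consistent. The die arithmetic gives 14.4 EF for NVL576, slightly under the quoted 15 EF; the gap may simply be rounding in the headline figure.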

Chip Shipments Surge, Production Capacity Expands Rapidly

Jensen Huang revealed that NVIDIA's fastest AI chip to date, the Blackwell GPU, is now in full production in Arizona. This means that Blackwell chips, previously only manufactured in Taiwan, can now be produced in the United States for the first time.

Huang also shared striking shipment figures: NVIDIA expects to ship 20 million Blackwell chips, compared with 4 million units for the previous-generation Hopper architecture over its entire lifecycle.

He added that 6 million Blackwell GPUs have shipped over the past four quarters, indicating continued strong demand. NVIDIA expects Blackwell and Rubin, which launches next year, to generate a combined $500 billion in GPU sales over five quarters.
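A quick back-of-envelope reading of these shipment and revenue figures, using only the numbers quoted above (variable names are illustrative):

```python
# Shipment and revenue figures as quoted by Huang
BLACKWELL_EXPECTED_UNITS = 20_000_000
HOPPER_LIFETIME_UNITS = 4_000_000
BLACKWELL_SHIPPED_LAST_4Q = 6_000_000
GPU_SALES_USD = 500_000_000_000   # Blackwell + Rubin, over five quarters
QUARTERS = 5

lifetime_ratio = BLACKWELL_EXPECTED_UNITS / HOPPER_LIFETIME_UNITS
avg_units_per_quarter = BLACKWELL_SHIPPED_LAST_4Q / 4
avg_sales_per_quarter = GPU_SALES_USD / QUARTERS

print(f"Blackwell vs. Hopper lifetime units: {lifetime_ratio:.0f}x")  # 5x
print(f"Average Blackwell GPUs per quarter: {avg_units_per_quarter:,.0f}")  # 1,500,000
print(f"Average GPU sales per quarter: ${avg_sales_per_quarter / 1e9:.0f} billion")  # $100 billion
```

These are simple averages; the actual quarter-by-quarter distribution was not given.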

Earlier this month, NVIDIA and TSMC announced that the first Blackwell wafers had been produced at TSMC's fab in Phoenix, Arizona. NVIDIA said in a video that Blackwell-based systems will now also be assembled in the United States.

NVIDIA Partners with Nokia for 6G Networks

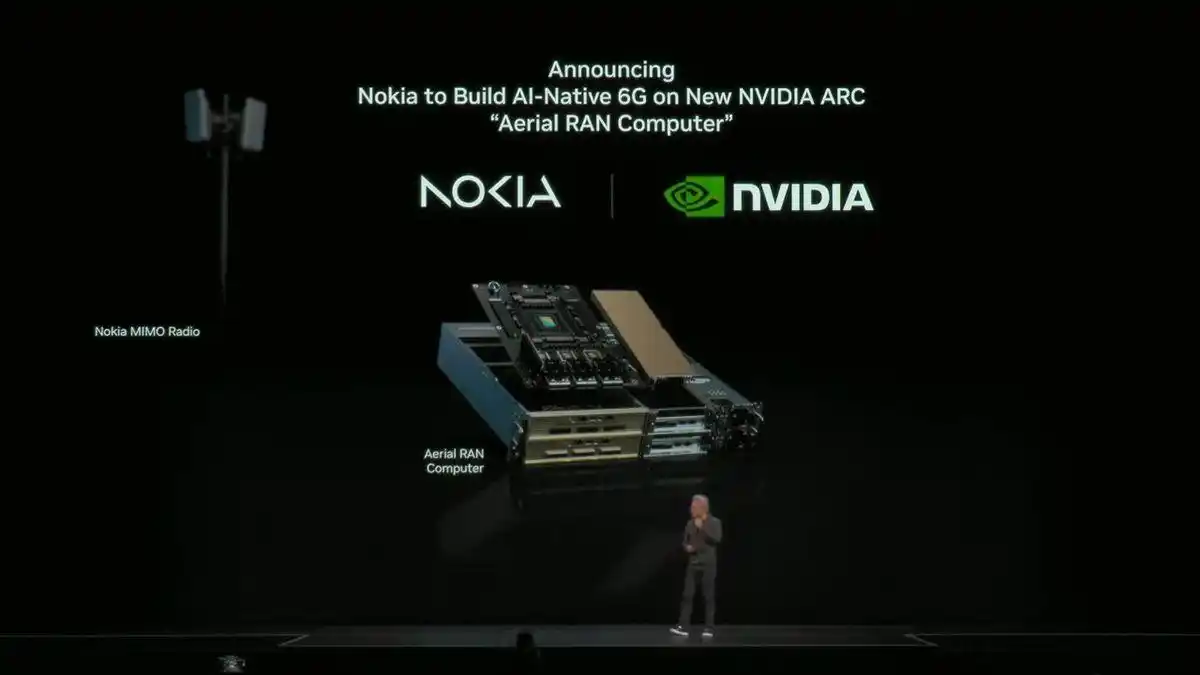

Huang explained that NVIDIA will work with Nokia to launch the Aerial RAN Computer (ARC) to drive the transition to 6G networks. The two companies will also develop AI platforms for 6G communication technologies.

How will 6G and AI come together? Beyond using AI to learn from and improve 6G spectral efficiency, we will also see AI-powered Radio Access Network (RAN) products, or "AI on RAN." In other words, while much computing today runs in centralized clouds such as Amazon Web Services (AWS), NVIDIA aims to build a cloud computing platform on top of 6G connectivity, bringing AI closer to where data is generated. This points to the potential of ultra-fast AI at the network edge, which could power technologies such as autonomous vehicles.

NVIDIA and Nokia announced a strategic partnership on Tuesday (October 28) to add NVIDIA-powered, commercial-grade AI-RAN products to Nokia's RAN portfolio, enabling communication service providers to launch AI-native 5G-Advanced and 6G networks on NVIDIA's platform.

NVIDIA will launch the Aerial RAN Computer Pro (ARC-Pro) computing platform for 6G networks, and Nokia will build new AI-RAN products on it to expand its RAN portfolio. NVIDIA will also make a $1 billion equity investment in Nokia at a subscription price of $6.01 per share.
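The stated terms imply the approximate number of Nokia shares involved. A minimal sketch, assuming the full $1 billion is applied at $6.01 per share and only whole shares are issued; working in integer cents avoids floating-point rounding:

```python
# Assumption (ours): the whole $1 billion buys whole shares at $6.01 each.
INVESTMENT_CENTS = 1_000_000_000 * 100   # $1 billion in cents
PRICE_CENTS = 601                        # $6.01 per share in cents

shares, leftover_cents = divmod(INVESTMENT_CENTS, PRICE_CENTS)

print(f"{shares:,} shares")                  # 166,389,351 shares
print(f"{leftover_cents} cents left over")   # 49 cents left over
```

That works out to roughly 166.4 million shares.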

Research firm Omdia predicts that the AI-RAN market will exceed $200 billion by 2030. The NVIDIA-Nokia collaboration will provide distributed edge AI inference capabilities, opening a new high-growth area for telecom operators.

T-Mobile US will partner with Nokia and Nvidia to drive the testing and development of AI-RAN technology, integrating it into its 6G development process. Trials are expected to begin in 2026, focusing on validating performance and efficiency improvements for customers. This technology will support AI-native devices such as autonomous vehicles, drones, augmented reality, and virtual reality glasses.

NVQLink Connects Quantum Computing and GPU Systems

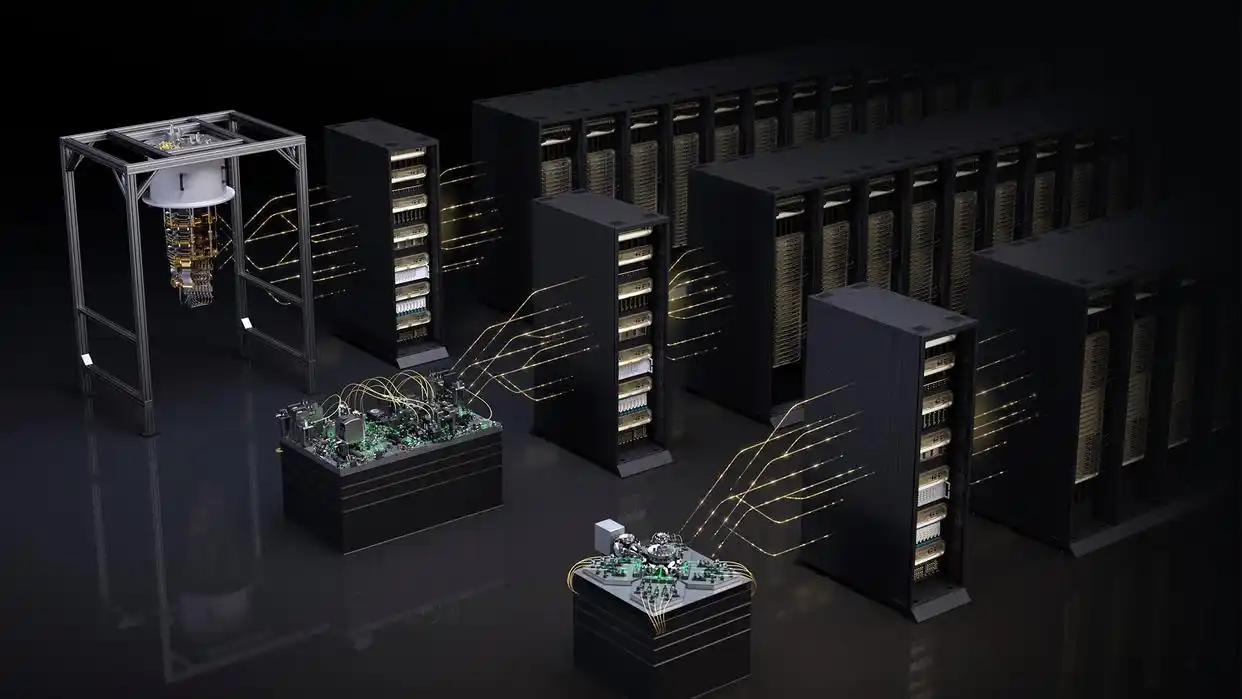

Quantum processors promise powerful capabilities, but qubits are highly sensitive to environmental noise, which limits their practical applications. GPU-based supercomputers can help by taking on demanding classical workloads and easing the burden on quantum processors. Huang said on Tuesday that NVIDIA has built NVQLink, an open system architecture, on top of its open-source quantum development platform, CUDA-Q.

Jensen Huang stated that he anticipates quantum computing will require support from traditional processors in addition to new technologies, and NVIDIA will help achieve this goal. "We now realize that directly connecting quantum computers to GPU supercomputers is crucial. This is the future of quantum computing."

NVQLink is a new high-speed interconnect technology that connects quantum processors to GPUs and CPUs. It's not intended to replace quantum computers, but rather to work with them to accelerate quantum computing.

Huang said NVQLink will help with error correction and calibration, and with coordinating which parts of an algorithm run on GPUs and which on quantum processors. He revealed that 17 quantum computing companies have already committed to supporting NVQLink. “The industry support is incredible. Quantum computing won’t replace traditional systems; they will work together.”

“It (NVQLink) can correct errors not only for today’s qubits but also for future qubits. We will scale these quantum computers from hundreds of qubits today to tens of thousands, and even hundreds of thousands in the future.”
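Huang's point is that error correction depends on fast classical computation running alongside the quantum hardware: syndromes are measured, decoded, and turned into corrections over and over. The toy sketch below shows only that classical decoding step for a 3-qubit bit-flip repetition code. It is an illustration of the idea, not NVQLink or CUDA-Q code, and it treats qubit values as plain bits, whereas real hardware extracts the parities through ancilla measurements without reading the data qubits directly.

```python
from typing import List, Optional, Tuple

# Syndrome = (parity of qubits 0 and 1, parity of qubits 1 and 2)
# mapped to the index of the single qubit to flip (None = no error seen).
SYNDROME_TABLE: dict = {
    (0, 0): None,  # no error detected
    (1, 0): 0,     # only the first check fires -> qubit 0 flipped
    (1, 1): 1,     # both checks fire -> middle qubit flipped
    (0, 1): 2,     # only the second check fires -> qubit 2 flipped
}


def measure_syndrome(bits: List[int]) -> Tuple[int, int]:
    """Compute the two parity checks of the repetition code."""
    return (bits[0] ^ bits[1], bits[1] ^ bits[2])


def decode(bits: List[int]) -> List[int]:
    """Return the codeword after correcting at most one bit-flip."""
    flip: Optional[int] = SYNDROME_TABLE[measure_syndrome(bits)]
    corrected = list(bits)
    if flip is not None:
        corrected[flip] ^= 1
    return corrected


print(decode([0, 1, 0]))  # [0, 0, 0]
print(decode([1, 1, 0]))  # [1, 1, 1]
```

Scaling this loop from a lookup table on three bits to decoders for thousands of physical qubits, within microsecond-scale time budgets, is the kind of workload Huang describes offloading to GPUs.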

NVIDIA says NVQLink has already garnered support from 17 quantum processor builders and 5 controller manufacturers, including Alice & Bob, Atom Computing, IonQ, IQM Quantum Computers, Quantum, and Rigetti. Nine U.S. national laboratories, led by the Department of Energy, will use NVQLink to drive breakthroughs in quantum computing, including Brookhaven National Laboratory, Fermilab, and Los Alamos National Laboratory (LANL).

NVIDIA says developers can access NVQLink through the CUDA-Q software platform to create and test applications that seamlessly use CPUs, GPUs, and quantum processors.

NVIDIA and Oracle to Build US Department of Energy's Largest AI Supercomputer

Huang announced that NVIDIA will work with the U.S. Department of Energy to build seven new supercomputers, to be deployed at the department's Argonne National Laboratory (ANL) and Los Alamos National Laboratory (LANL).

NVIDIA also announced a partnership with Oracle to build Solstice, the Department of Energy's largest AI supercomputer, which will be equipped with a record 100,000 NVIDIA Blackwell GPUs. A second system, Equinox, will contain 10,000 Blackwell GPUs and is expected to be operational in the first half of 2026.

The two systems are interconnected via NVIDIA networking and together deliver 2,200 exaflops of AI performance. They will let scientists and researchers develop and train new frontier models and AI reasoning models with NVIDIA's Megatron-Core library, and scale inference with the TensorRT software stack.
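Dividing the combined figure by the total GPU count gives the implied per-GPU rating. This sketch assumes the 2,200 exaflops is a simple sum of peak AI throughput across all 110,000 GPUs in both systems; the text does not state the numeric precision behind the figure.

```python
TOTAL_AI_EXAFLOPS = 2_200
SOLSTICE_GPUS = 100_000
EQUINOX_GPUS = 10_000

total_gpus = SOLSTICE_GPUS + EQUINOX_GPUS
# 1 exaflop = 1,000 petaflops
per_gpu_petaflops = TOTAL_AI_EXAFLOPS * 1_000 / total_gpus

print(f"{total_gpus:,} GPUs -> {per_gpu_petaflops:.0f} PF of AI compute per GPU")
```

About 20 petaflops per GPU is far beyond any GPU's FP64 rating, which suggests the total is a peak low-precision AI figure rather than traditional double-precision scientific throughput.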

Energy Secretary Chris Wright stated, "Maintaining U.S. leadership in high-performance computing requires us to build bridges to the next era of computing: accelerating quantum supercomputing. Deep collaboration between our national laboratories, startups, and industry partners like NVIDIA is crucial to this mission."

Argonne National Laboratory Director Paul K. Kearns stated that these systems will seamlessly connect with the Department of Energy's cutting-edge experimental facilities, such as the Advanced Photon Source, enabling scientists to address the nation's most pressing challenges through scientific discoveries.

BlueField-4 Drives AI Factory Infrastructure Upgrades

Huang believes agentic AI is no longer just a tool but an assistant in all of our work, and that the opportunities AI brings are "numerous." NVIDIA plans to build factories dedicated to AI, filled with chips.

On Tuesday (October 28), NVIDIA announced the BlueField-4 processor, which powers the operating system of AI factories.

The BlueField-4 data processing unit supports 800 Gb/s of throughput, delivering breakthrough acceleration for gigascale AI infrastructure. The platform combines an NVIDIA Grace CPU with ConnectX-9 networking, offering six times the compute of its predecessor, BlueField-3, and supporting AI factories three times larger.

BlueField-4 is designed for a new class of AI storage platforms, laying the foundation for efficient data processing and breakthrough performance at scale in AI data pipelines. The platform supports multi-tenant networking, fast data access, AI runtime security, and cloud elasticity, and natively supports NVIDIA DOCA microservices.

NVIDIA states that several industry leaders plan to adopt BlueField-4 technology. These include server and storage companies such as Cisco, DDN, Dell Technologies, HPE, IBM, Lenovo, Supermicro, VAST Data, and WEKA. Network security companies include Armis, Check Point, Cisco, F5, Forescout, Palo Alto Networks, and Trend Micro.

Furthermore, cloud and AI service providers such as Akamai, CoreWeave, Crusoe, Lambda, Oracle, Together.ai, and xAI are building solutions based on NVIDIA's DOCA microservices to accelerate multi-tenant networks, improve data movement speed, and enhance the security of AI factories and supercomputing clouds.

NVIDIA BlueField-4 is expected to become available in early access in 2026 as part of the Vera Rubin platform.

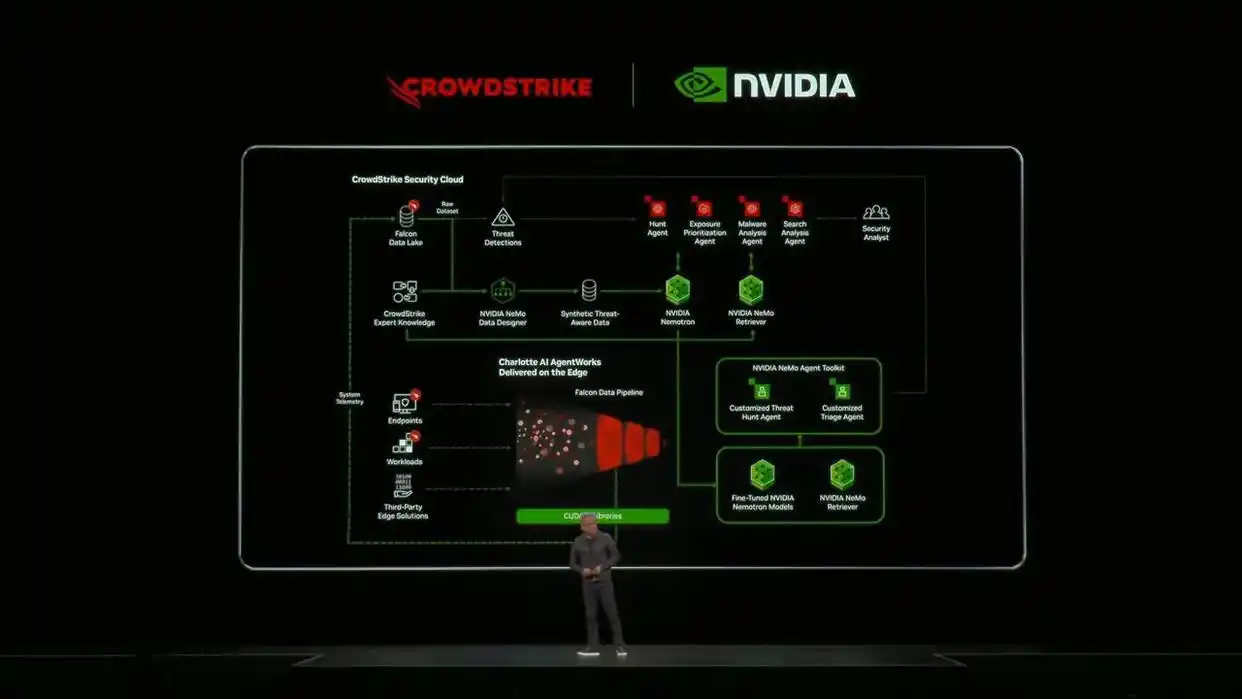

NVIDIA Partners with CrowdStrike for AI Cybersecurity Development

Jensen Huang stated that NVIDIA will collaborate with cybersecurity company CrowdStrike on AI cybersecurity models.

NVIDIA announced a strategic partnership with CrowdStrike to provide NVIDIA AI computing services on the CrowdStrike Falcon XDR platform. This collaboration combines Falcon platform data with NVIDIA GPU-optimized AI pipelines and software, including the new NVIDIA NIM microservices, enabling customers to create customized, secure generative AI models.

According to the CrowdStrike 2024 Global Threat Report, the average breakout time, meaning how long an attacker takes to move laterally after the initial compromise, has dropped to 62 minutes, with the fastest recorded breakout taking just over 2 minutes. As attacks become faster and more sophisticated, organizations need AI-driven security technologies to achieve the necessary speed and automation.

Jensen Huang stated, "Cybersecurity is essentially a data issue—the more data an enterprise can process, the more incidents it can detect and handle. Combining NVIDIA Accelerated Compute and Generative AI with CrowdStrike cybersecurity provides enterprises with unprecedented threat visibility."

CrowdStrike will leverage NVIDIA Accelerated Compute, NVIDIA Morpheus, and NIM microservices to bring customized LLM-driven applications to the enterprise. Combined with the unique contextual data of the Falcon platform, customers will be able to address new use cases in specific domains, including processing petabyte-scale logs to improve threat hunting, detecting supply chain attacks, identifying anomalies in user behavior, and proactively defending against emerging vulnerabilities.

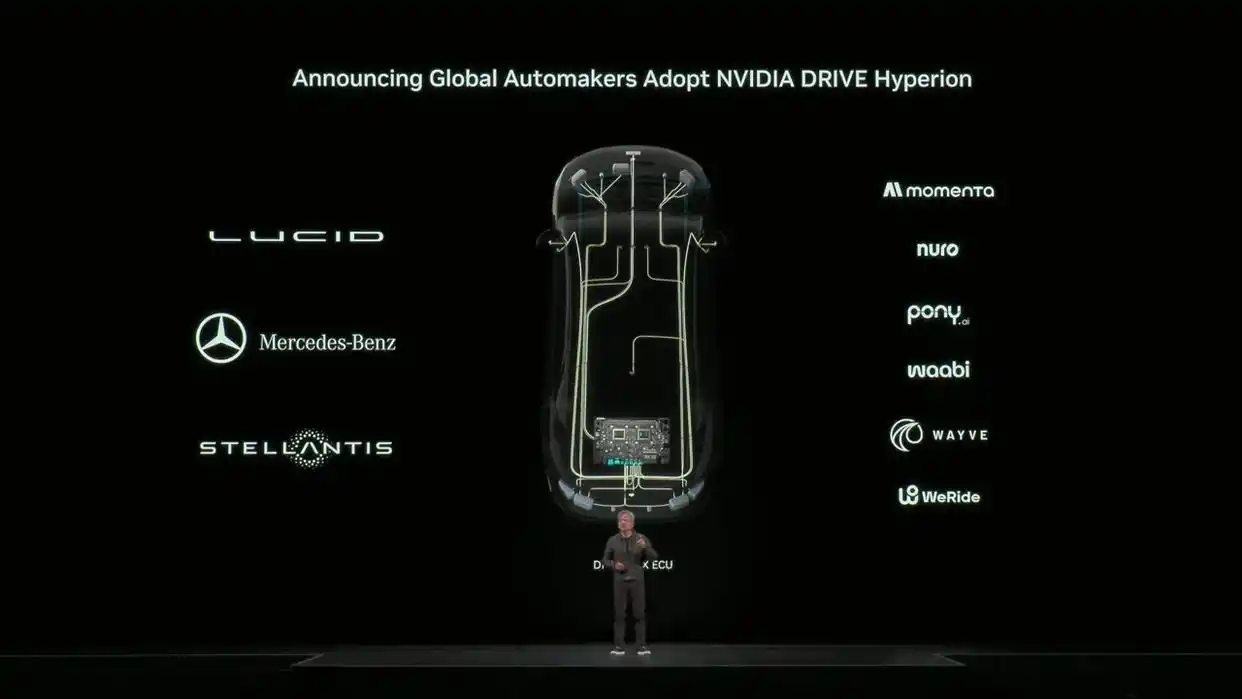

NVIDIA's New Autonomous Driving Development Platform Helps Uber Deploy Robotaxi Fleet

Huang said NVIDIA's end-to-end autonomous driving platform, DRIVE Hyperion, is ready to power vehicles for Robotaxi services. Global automakers including Stellantis, Lucid, and Mercedes-Benz will use NVIDIA's new DRIVE AGX Hyperion 10 architecture to accelerate the development of autonomous driving technology.

NVIDIA announced a partnership with Uber to expand the world's largest Level 4 mobility network using the next-generation NVIDIA DRIVE AGX Hyperion 10 autonomous driving development platform and DRIVE AV software. NVIDIA will support Uber in gradually expanding its global autonomous driving fleet to 100,000 vehicles starting in 2027.

DRIVE AGX Hyperion 10 is a production-ready reference compute and sensor architecture that makes any vehicle Level 4-ready. It lets automakers build cars, trucks, and vans with proven hardware and sensors that can host any compatible autonomous driving software.

Huang said, "Driverless taxis mark the beginning of a global transportation transformation: making transportation safer, cleaner, and more efficient. Together with Uber, we've created a framework for the entire industry to deploy autonomous vehicle fleets at scale." Uber CEO Dara Khosrowshahi said, "NVIDIA is a pillar of the AI era and is now fully leveraging this innovation to unlock Level 4 autonomous driving capabilities at massive scale."

Stellantis is developing an AV-Ready platform specifically optimized to support Level 4 capabilities and meet the requirements of driverless taxis. These platforms will integrate NVIDIA's full-stack AI technology, further expanding connectivity with Uber's global mobility ecosystem.

Uber stated that Stellantis will be one of the first manufacturers to supply Robotaxi vehicles, providing at least 5,000 NVIDIA-powered Robotaxi vehicles for Uber's operations in the US and internationally. Uber will be responsible for end-to-end fleet operations, including remote assistance, charging, cleaning, maintenance, and customer support.

Stellantis announced a collaboration with Foxconn on hardware and system integration, with production scheduled to begin in 2028. Initial operations will be conducted in the US in partnership with Uber. Stellantis stated that pilot projects and testing are expected to gradually roll out over the next few years.

Lucid is advancing Level 4 autonomous driving for its next-generation passenger vehicles using full-stack NVIDIA AV software on the DRIVE Hyperion platform, aiming to deliver its first Level 4 autonomous vehicles to customers. Mercedes-Benz is exploring future collaboration built on its proprietary MB.OS operating system and DRIVE AGX Hyperion, with the new S-Class set to offer a premium Level 4 luxury driving experience.

NVIDIA and Uber will continue to support and accelerate global partners developing the software stack on the NVIDIA DRIVE Level 4 platform, including Avride, May Mobility, Momenta, Nuro, Pony.ai, Wayve, and WeRide. In the trucking sector, Aurora, Volvo Autonomous Solutions, and Waabi are developing Level 4 autonomous trucks powered by the NVIDIA DRIVE platform.

NVIDIA and Palantir Build Operational AI Technology Stack; Lowe's First to Apply Supply Chain Optimization Solution

The core of the NVIDIA-Palantir collaboration is integrating NVIDIA's GPU-accelerated computing, open-source models, and data processing capabilities into the Ontology at the heart of the Palantir AI Platform (AIP). The Ontology creates a digital replica of an enterprise by organizing complex data and logic into interconnected objects, links, and actions, providing the foundation for AI-driven business process automation.
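To make the objects, links, and actions idea concrete, here is a deliberately tiny, hypothetical model of a supply-chain replica in plain Python. The class names, the `reroute_supply` action, and the sample objects are all invented for illustration; they are not Palantir's Ontology APIs.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Tuple


@dataclass
class ObjectNode:
    """A typed business object, e.g. a store or a distribution center."""
    object_id: str
    object_type: str
    properties: Dict[str, float] = field(default_factory=dict)


@dataclass
class DigitalReplica:
    """Hypothetical replica: objects plus (source, relation, target) links."""
    objects: Dict[str, ObjectNode] = field(default_factory=dict)
    links: List[Tuple[str, str, str]] = field(default_factory=list)

    def add_object(self, node: ObjectNode) -> None:
        self.objects[node.object_id] = node

    def add_link(self, source: str, relation: str, target: str) -> None:
        # Links may only connect objects already registered in the replica.
        if source not in self.objects or target not in self.objects:
            raise KeyError("both endpoints must be registered objects")
        self.links.append((source, relation, target))

    def targets(self, source: str, relation: str) -> List[str]:
        return [t for s, r, t in self.links if s == source and r == relation]

    def reroute_supply(self, store: str, old_dc: str, new_dc: str) -> None:
        """Example 'action': move a store to a different distribution center."""
        self.links.remove((old_dc, "supplies", store))
        self.add_link(new_dc, "supplies", store)


replica = DigitalReplica()
for node in (
    ObjectNode("dc_east", "DistributionCenter", {"capacity": 500.0}),
    ObjectNode("dc_west", "DistributionCenter", {"capacity": 300.0}),
    ObjectNode("store_42", "Store", {"weekly_demand": 120.0}),
):
    replica.add_object(node)

replica.add_link("dc_east", "supplies", "store_42")
replica.reroute_supply("store_42", "dc_east", "dc_west")
print(replica.targets("dc_west", "supplies"))  # ['store_42']
```

Modeling changes as named actions, rather than raw edits to the link list, is what lets automated decision logic operate on the replica in a controlled, auditable way.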

Jensen Huang stated, "Palantir and NVIDIA share a common vision: to put AI into action and transform enterprise data into decision intelligence. By combining Palantir's powerful AI-driven platform with NVIDIA CUDA-X accelerated computing and Nemotron open-source AI models, we are building a next-generation engine to power specialized AI applications and agents running the world's most complex industrial and operational pipelines."

Technically, customers can use NVIDIA CUDA-X data science libraries for data processing through the Ontology, combined with NVIDIA accelerated computing, to drive real-time AI decision-making in complex, business-critical workflows. NVIDIA AI Enterprise, including the cuOpt decision optimization software, will support dynamic supply chain management. NVIDIA Nemotron reasoning models and NeMo Retriever open models will help enterprises quickly build AI agents informed by the Ontology.

"Palantir focuses on deploying AI that delivers immediate asymmetric value to our customers," said Alex Karp, co-founder and CEO of Palantir. "We are proud to partner with NVIDIA to integrate our AI-driven decision intelligence systems with the world's most advanced AI infrastructure."

Retailer Lowe's is among the first to adopt the integrated Palantir and NVIDIA technology stack, creating a digital replica of its global supply chain network for dynamic and continuous AI optimization. This technology aims to improve supply chain agility while enhancing cost savings and customer satisfaction.

Seemantini Godbole, Chief Digital and Information Officer at Lowe's, stated, "Modern supply chains are extremely complex and dynamic systems, and AI is crucial for helping Lowe's adapt and optimize rapidly under ever-changing conditions. Even small changes in demand can have ripple effects across the global network. By combining Palantir technology with NVIDIA AI, Lowe's is reimagining retail logistics, enabling us to better serve our customers every day."

NVIDIA and Palantir also plan to integrate NVIDIA Blackwell architecture into Palantir AIP to accelerate the end-to-end AI pipeline, from data processing and analytics to model development, fine-tuning, and production AI. Enterprises will be able to run AIP in NVIDIA AI factories for optimized acceleration. Palantir AIP will also be supported in NVIDIA's newly launched government AI factory reference design.

Eli Lilly Builds Pharmaceutical Industry's Most Powerful Supercomputer Powered by Over 1,000 Blackwell Ultra GPUs

Eli Lilly's collaboration with NVIDIA will build a supercomputer powered by over 1,000 Blackwell Ultra GPUs, connected via a unified high-speed network. This supercomputer will power an AI factory, a dedicated computing infrastructure for the large-scale development, training, and deployment of AI models for drug discovery and development.

According to Diogo Rau, Eli Lilly's Chief Information and Digital Officer, it takes about 10 years on average for a drug to go from first human trials to market. The company expects to complete the supercomputer and AI factory in December and bring it online in January. However, these new tools may not yield significant returns for Eli Lilly and other pharmaceutical companies until around 2030. “The things we’re talking about discovering with this computing power right now will really see those benefits by 2030,” Rau said.

“This is truly a new kind of scientific instrument,” said Thomas Fuchs, Chief AI Officer at Eli Lilly. “For biologists, it’s like a giant microscope. It really allows us to do things on such a massive scale that we couldn’t do before.” Scientists will be able to train AI models to test potential drugs in millions of experiments, “greatly expanding the scope and complexity of drug discovery.”

While discovering new drugs isn’t the only focus of these new tools, Rau said it is “where the biggest opportunity lies,” adding, “We hope to discover new molecules that humans could never discover on their own.”

Multiple AI models will be available on Lilly TuneLab, an AI and machine learning platform that gives biotech companies access to drug discovery models Eli Lilly has trained on years of proprietary research data, data the company values at $1 billion. Eli Lilly launched the platform in September to broaden industry access to drug discovery tools.

Rau noted that, in exchange for access to the models, biotech companies will contribute some of their own research and data to help train them. Because TuneLab uses federated learning, in which models are trained where the data lives and only model updates are shared, these companies can benefit from Eli Lilly's models without directly sharing their raw data.
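Federated learning can be illustrated with federated averaging (FedAvg), one common scheme; the source does not say which algorithm TuneLab actually uses. In this minimal sketch, two hypothetical clients each fit a one-parameter linear model on private data, only the updated weight leaves each client, and the server averages the weights in proportion to each client's data size.

```python
from typing import List, Tuple

Dataset = List[Tuple[float, float]]  # (x, y) pairs that never leave a client


def local_update(w: float, data: Dataset, lr: float = 0.05, epochs: int = 5) -> float:
    """Gradient descent on one client's private data for y ≈ w * x (MSE loss)."""
    for _ in range(epochs):
        grad = sum(2 * (w * x - y) * x for x, y in data) / len(data)
        w -= lr * grad
    return w


def fed_avg(clients: List[Dataset], rounds: int = 50, w: float = 0.0) -> float:
    """Federated averaging: broadcast w, train locally, average weighted by data size."""
    total = sum(len(data) for data in clients)
    for _ in range(rounds):
        # Only the locally updated weights are sent back, not the raw data.
        updates = [(local_update(w, data), len(data)) for data in clients]
        w = sum(w_i * n_i for w_i, n_i in updates) / total
    return w


# Hypothetical private datasets; both follow y = 3x.
client_a = [(1.0, 3.0), (1.5, 4.5), (2.0, 6.0)]
client_b = [(0.5, 1.5), (1.0, 3.0)]

w_global = fed_avg([client_a, client_b])
print(round(w_global, 4))  # 3.0
```

The shared model converges to the slope both clients' data support, even though neither dataset is pooled. Production systems typically add safeguards such as secure aggregation, since shared weights can still leak some information about the underlying data.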

Eli Lilly also plans to use the supercomputer to shorten drug development timelines and get treatments to patients faster. The company said new scientific AI agents can support researchers, while advanced medical imaging can give scientists a clearer view of how diseases progress and help them develop new biomarkers for personalized care.
